Pay attention to the `worker_bin_path` and `cache_path` variables: they must be adapted to your setup. Depending on your needs, you might also have to modify `cache_reserve_mb` and `cache_quota_mb`.
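Before running `cryosparcm cluster connect`, you may want to check the values you chose. The sketch below defines a hypothetical helper, `check_worker_paths` (not part of CryoSPARC). It takes the `worker_bin_path`, `cache_path` and `cache_quota_mb` values from your `cluster_info.json` and checks three things: the worker binary is executable, the cache directory is writable, and its filesystem has at least the quota free. It assumes a `df` that supports the POSIX `-P` and `-k` options. If `cache_path` is local to the compute nodes, run the check on a compute node rather than on the login node.

```shell
#!/bin/bash
# Hypothetical helper: sanity-check the values used in cluster_info.json.
# Usage: check_worker_paths WORKER_BIN_PATH CACHE_PATH CACHE_QUOTA_MB
check_worker_paths() {
    local bin_path=$1 cache_path=$2 quota_mb=$3
    local status=0

    # worker_bin_path normally points to $CRYOSPARC_ROOT/cryosparc_worker/bin/cryosparcw
    if [[ ! -f "$bin_path" || ! -x "$bin_path" ]]; then
        echo "worker_bin_path is not an executable file: $bin_path" >&2
        status=1
    fi

    # cache_path must be an existing directory writable by your user
    if [[ ! -d "$cache_path" || ! -w "$cache_path" ]]; then
        echo "cache_path is not a writable directory: $cache_path" >&2
        return 1
    fi

    # Available space (4th column of POSIX df output, in 1K blocks), converted to MB
    local free_kb
    free_kb=$(df -Pk "$cache_path" | awk 'NR == 2 { print $4 }')
    if (( free_kb / 1024 < quota_mb )); then
        echo "only $(( free_kb / 1024 )) MB free in $cache_path, less than cache_quota_mb=$quota_mb" >&2
        status=1
    fi

    return "$status"
}

# Example with a hypothetical cache path and quota:
# check_worker_paths "$CRYOSPARC_ROOT/cryosparc_worker/bin/cryosparcw" /path/to/cache 10000
```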

#### Create `$CRYOSPARC_ROOT/curnagl_config/cluster_script.sh`

Use your favourite editor to fill the file with the following content:

```shell
#!/bin/bash
#SBATCH --job-name=cryosparc_{{ project_uid }}_{{ job_uid }}
#SBATCH --partition={{ "gpu" if num_gpu > 0 else "cpu" }}
#SBATCH --time=12:00:00
#SBATCH --output={{ job_log_path_abs }}
#SBATCH --error={{ job_log_path_abs }}
#SBATCH --nodes=1
#SBATCH --mem={{ (ram_gb*1024*2)|int }}M
#SBATCH --ntasks-per-node=1
#SBATCH --cpus-per-task={{ num_cpu }}
#SBATCH --gres=gpu:{{ num_gpu }}
#SBATCH --gres-flags=enforce-binding

module load cuda

# Expose only the GPUs that have no running compute process
# (nvidia-smi numbers devices starting from 0)
available_devs=""
for devidx in $(seq 0 15); do
    if [[ -z $(nvidia-smi -i $devidx --query-compute-apps=pid --format=csv,noheader) ]] ; then
        if [[ -z "$available_devs" ]] ; then
            available_devs=$devidx
        else
            available_devs=$available_devs,$devidx
        fi
    fi
done
export CUDA_VISIBLE_DEVICES=$available_devs

srun {{ run_cmd }}
```

#### Install CryoSPARC master

```shell
cd $CRYOSPARC_ROOT/cryosparc_master
./install.sh --license $LICENSE_ID --hostname curnagl --dbpath $CRYOSPARC_ROOT/database --port $CRYOSPARC_PORT
```

At the end of the installation process, the installer asks whether it may modify your `~/.bashrc` file: answer yes.
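The device-selection loop in the `cluster_script.sh` template builds a comma-separated list of GPU indices that have no running compute process and exports it as `CUDA_VISIBLE_DEVICES`. To make that logic easy to try on a machine without GPUs, the sketch below moves it into a hypothetical function, `select_idle_gpus`, which reads one `index [pid ...]` line per GPU from standard input instead of calling `nvidia-smi`. This input format is an assumption made only for illustration.

```shell
#!/bin/bash
# Hypothetical helper mirroring the loop in cluster_script.sh.
# Reads lines "<gpu_index> [pid ...]" on stdin; a line without any PID
# means the GPU is idle (the real script gets this from nvidia-smi).
# Prints the idle indices joined with commas.
select_idle_gpus() {
    local available_devs="" devidx pids
    while read -r devidx pids; do
        [[ -z "$devidx" ]] && continue   # skip blank lines
        if [[ -z "$pids" ]]; then
            if [[ -z "$available_devs" ]]; then
                available_devs=$devidx
            else
                available_devs=$available_devs,$devidx
            fi
        fi
    done
    echo "$available_devs"
}

# GPUs 0 and 2 are idle, GPU 1 runs process 4242: prints 0,2
printf '0\n1 4242\n2\n' | select_idle_gpus
```

Note also that the template asks Slurm for twice the memory CryoSPARC estimates for the job: with `ram_gb` = 8, the `--mem` line renders as `--mem=16384M` (8 × 1024 × 2).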

#### Start CryoSPARC and create a user

```shell
export PATH=$CRYOSPARC_ROOT/cryosparc_master/bin:$PATH
cryosparcm start
cryosparcm createuser --email "ursula.lambda@unil.ch" --password "ursulabestpassword" --username "ulambda" --firstname "Ursula" --lastname "Lambda"
```

Of course, replace these values with your own information when creating the user, and do not use your UNIL password.
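Since the CryoSPARC password should differ from your UNIL password, one option is to generate a random one. A minimal sketch, assuming only `tr`, `head` and `/dev/urandom` are available; `gen_password` and `CRYOSPARC_PASSWORD` are hypothetical names:

```shell
#!/bin/bash
# Hypothetical helper: print a random alphanumeric password (default length 20).
gen_password() {
    local length=${1:-20}
    # Keep only letters and digits from the kernel's random byte stream
    LC_ALL=C tr -dc 'A-Za-z0-9' < /dev/urandom | head -c "$length"
    echo
}

CRYOSPARC_PASSWORD=$(gen_password 20)
echo "Save this password in your password manager: $CRYOSPARC_PASSWORD"
# Then pass it to: cryosparcm createuser ... --password "$CRYOSPARC_PASSWORD" ...
```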

#### Install CryoSPARC worker

First, connect to a GPU node:

```shell
Sinteractive -G1 -m8G
```

Once you are connected to the node, run the following, using your own license ID and CryoSPARC directory:

```shell
export LICENSE_ID="235e3142-d2b0-17eb-c43a-9c2461c1234d"
export CRYOSPARC_ROOT=/work/FAC/FBM/DMF/ulambda/cryosparc
module load cuda
cd $CRYOSPARC_ROOT/cryosparc_worker
./install.sh --license $LICENSE_ID --cudapath $CUDA_HOME
```

At the end of the process, you can log out.

#### Configure the cluster workers

Back on the login node, register the cluster configuration. `cryosparcm cluster connect` reads `cluster_info.json` and `cluster_script.sh` from the current directory:

```shell
cd $CRYOSPARC_ROOT/curnagl_config
cryosparcm cluster connect
```

## 4. Connection to the web interface

Create a tunnel from your laptop to the Curnagl login node:

```shell
ssh -N -L 8080:localhost:45678 ulambda@curnagl.dcsr.unil.ch
```

Note that port 45678 **must** be replaced with the port that DCSR gave you, and `ulambda` **must** be replaced with your UNIL login.
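The tunnel command depends on two per-user values: your UNIL login and the port assigned by DCSR. A small wrapper keeps them in one place. This is a sketch only: `cryosparc_tunnel` and the `DRY_RUN` switch are hypothetical, and the local port defaults to 8080 as in this guide. With `DRY_RUN=1` the function only prints the `ssh` command instead of running it.

```shell
#!/bin/bash
# Hypothetical wrapper around the SSH tunnel to the Curnagl login node.
# Usage: cryosparc_tunnel UNIL_LOGIN CRYOSPARC_PORT [LOCAL_PORT]
cryosparc_tunnel() {
    local login=$1 remote_port=$2 local_port=${3:-8080}
    if [[ -z "$login" || -z "$remote_port" ]]; then
        echo "usage: cryosparc_tunnel UNIL_LOGIN CRYOSPARC_PORT [LOCAL_PORT]" >&2
        return 1
    fi
    # -N: run no remote command, only forward the port
    # -L: laptop's localhost:LOCAL_PORT -> login node's localhost:CRYOSPARC_PORT
    local cmd=(ssh -N -L "${local_port}:localhost:${remote_port}" "${login}@curnagl.dcsr.unil.ch")
    if [[ "${DRY_RUN:-0}" == 1 ]]; then
        echo "${cmd[*]}"
    else
        "${cmd[@]}"
    fi
}

# Prints: ssh -N -L 8080:localhost:45678 ulambda@curnagl.dcsr.unil.ch
DRY_RUN=1 cryosparc_tunnel ulambda 45678
```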

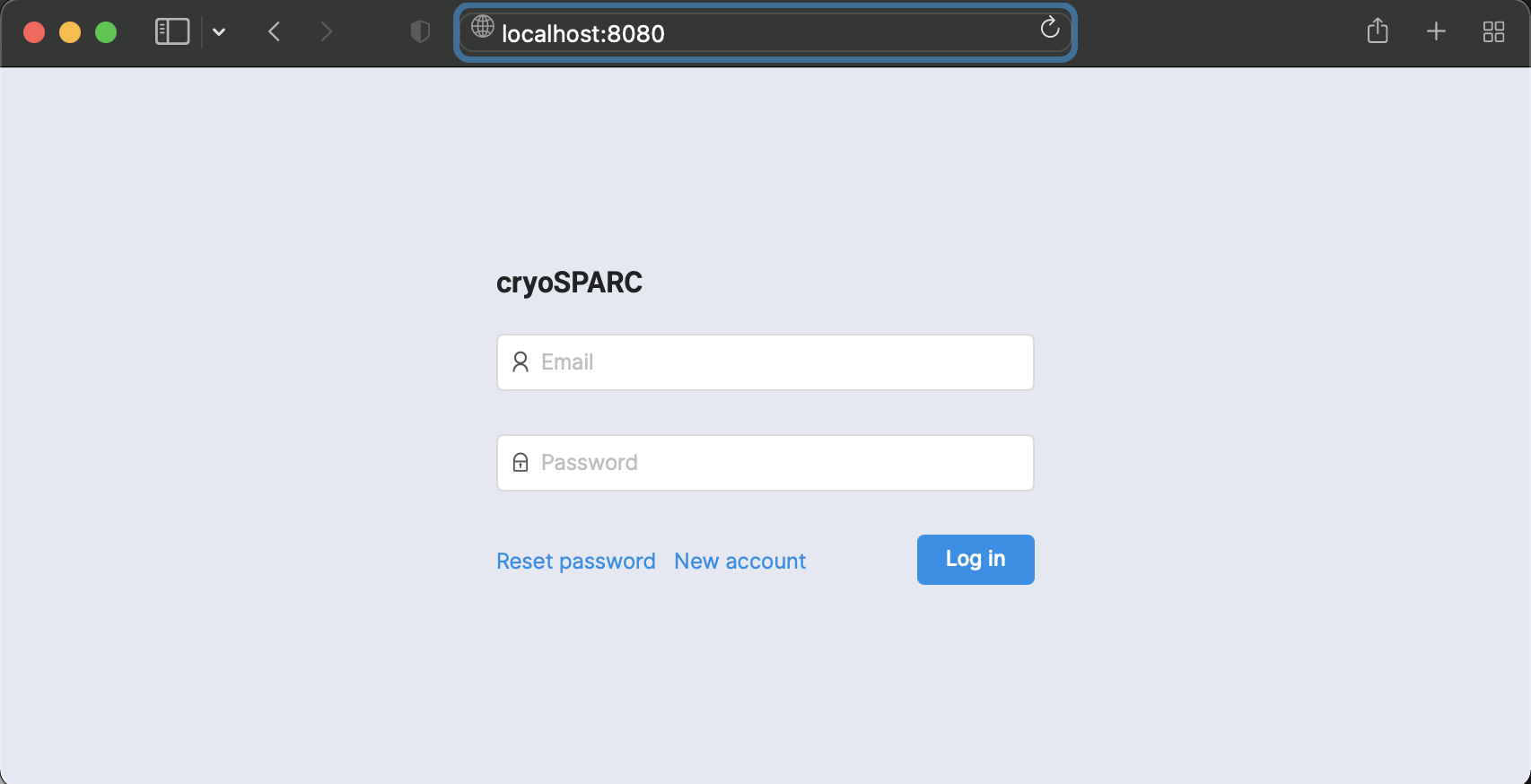

Then open a web browser at the following address: [http://localhost:8080](http://localhost:8080). Log in with the credentials you defined when you created the user.

## 5. Working with CryoSPARC

When you start working with CryoSPARC on Curnagl, start it from the login node:

```shell
cryosparcm start
```

When you have finished, stop CryoSPARC to avoid wasting resources on the Curnagl login node:

```shell
cryosparcm stop
```
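Because CryoSPARC runs on the shared login node, it is easy to forget the `cryosparcm stop` step. A minimal sketch of a wrapper that ties start and stop together, assuming you run it in a terminal you keep open for the whole session; `cryosparc_session` is a hypothetical name, not a CryoSPARC command:

```shell
#!/bin/bash
# Hypothetical wrapper: keep CryoSPARC running for one working session only.
# The body runs in a subshell ( ... ), so the EXIT trap does not leak into your shell.
cryosparc_session() (
    cryosparcm start || exit 1
    # Stop CryoSPARC whenever this subshell exits
    trap 'cryosparcm stop' EXIT
    read -r -p "CryoSPARC is running. Press Enter to stop it... "
)

# Usage, in a terminal on the Curnagl login node:
# cryosparc_session
```

Since CryoSPARC jobs communicate with the master while they run, wait until the jobs you launched have finished before pressing Enter.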